Wrong Core Parameter on 8t WUs?


**Matthias Lehmkuhl** (Joined: 7 Oct 11, Posts: 35, Credit: 3,310,980, RAC: 2,405)

I got this WU, which should run on 8 cores: https://yafu.myfirewall.org/yafu/result.php?resultid=8012392

- Project: yafu
- Name: yafu_ali_2136852_L134_C131_1652795706_2_2
- Application: YAFU-8t 134.05 (8t)
- Workunit name: yafu_ali_2136852_L134_C131_1652795706_2
- Status: Active
- Received: 28.05.2022 09:59:02
- Deadline: 30.05.2022 19:53:32
- ...
- Directory: slots/4

But when I look at the core usage in BoincTasks, I see that only 1 core is used, and stderr.txt also says it is using only one thread:

`10:04:19 (14068): wrapper (7.5.26014): starting`

`10:04:19 (14068): wrapper: running yafu.exe (-threads 1 -batchfile in)`

In Process Explorer I also saw only one ecm.exe process running when I checked yesterday.

Matthias

**yoyo_rkn** (Volunteer moderator, Project administrator, Project developer, Project tester, Volunteer developer, Volunteer tester, Project scientist; Joined: 22 Aug 11, Posts: 787, Credit: 17,634,117, RAC: 0)

It runs ECM on all cores, but sometimes one ECM run takes a bit longer than the others. This should only be a matter of minutes; afterwards it starts the next ECM round on all cores. After the ECM runs, yafu performs some consolidation of the ECM results, which also runs single-threaded. The same applies to the NFS phase: it runs GNFS in parallel on all cores, but the runs do not finish at the same time, and sometimes one of them needs a bit longer. Only after the last GNFS run has finished are new GNFS runs started on all cores. The calculation of the final results at the end is also single-threaded.

yoyo
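The pattern described above (parallel runs start together, and the next round waits for the slowest one) can be illustrated with a small model. This is a hypothetical sketch, not yafu's code: the function names, the one-run-per-core assumption and the example durations are made up for illustration. It shows how a single straggling ECM or GNFS run briefly leaves only one core busy.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class RoundStats:
    wall_time: float       # the round ends when its slowest run finishes
    busy_core_time: float  # total core time actually spent computing
    utilization: float     # fraction of the available core time that was used


def round_stats(run_times: list[float], cores: int) -> RoundStats:
    """Model one round of parallel runs followed by a barrier.

    Hypothetical model: one run per core, all started at the same moment,
    and the next round only starts after every run has finished.
    """
    if not run_times or len(run_times) > cores:
        raise ValueError("expected between 1 and `cores` runs")
    wall = max(run_times)
    busy = sum(run_times)
    return RoundStats(wall, busy, busy / (cores * wall))


def single_core_tail(run_times: list[float]) -> float:
    """Time at the end of a round during which only the slowest run is active."""
    ordered = sorted(run_times)
    if len(ordered) == 1:
        return ordered[0]
    return ordered[-1] - ordered[-2]


# Made-up numbers: seven runs take 60 minutes, one takes 70.
stats = round_stats([60] * 7 + [70], cores=8)
print(stats.utilization, single_core_tail([60] * 7 + [70]))
```

With these made-up numbers the round uses 87.5 % of the available core time, and for the last 10 minutes only one core is busy. That short dip is expected; a task that stays on one core for hours points to something else.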

**Matthias Lehmkuhl** (Joined: 7 Oct 11, Posts: 35, Credit: 3,310,980, RAC: 2,405)

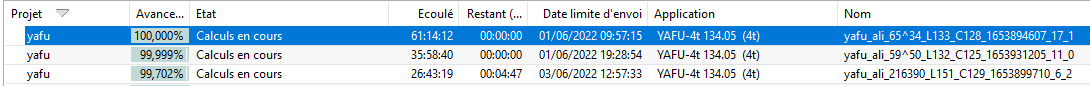

Hello yoyo, yes, that is how yafu 8t normally runs. But this result was running only one ECM process, and is now running only one GNFS process at a time, so the remaining 7 cores are used by tasks of other projects instead of being claimed by yafu 8t. BOINC is running on 8 cores/CPUs, and the image shows the actual CPU usage of the BOINC projects while my yafu 8t task is running. The image tag is not working for me, so here it is also as a link to OneDrive: https://1drv.ms/u/s!Apg6sr6l28yehnng4Pso4kpnDYpS?e=UGhl3g

Matthias

**yoyo_rkn** (Volunteer moderator, Project administrator, Project developer, Project tester, Volunteer developer, Volunteer tester, Project scientist; Joined: 22 Aug 11, Posts: 787, Credit: 17,634,117, RAC: 0)

Can you see the command line of yafu.exe somehow? Does it run with -threads 8? Some users fiddle with app_info to modify the application and then forget about it. A long time later they no longer remember and wonder why it doesn't run as expected. Did you do that? If you don't know, detach from the project, make sure the project folder is deleted, and reattach.

yoyo

**Matthias Lehmkuhl** (Joined: 7 Oct 11, Posts: 35, Credit: 3,310,980, RAC: 2,405)

I found the command line for this result: `"yafu.exe" -threads 1 -batchfile in`

The previous task on this machine ran normally with 8 cores, according to the stderr.txt of an older result from this machine (https://yafu.myfirewall.org/yafu/result.php?resultid=8015475):

`12:27:39 (15320): wrapper: running yafu.exe (-threads 8 -batchfile in)`

And I don't have an app_info.xml for yafu in the project folder.

Matthias
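To check this on several machines, one can scan each task's stderr.txt for the wrapper's `running ... (-threads N ...)` line. The sketch below assumes the line format seen in the excerpts quoted in this thread; the function name is hypothetical, and the BOINC wrapper may log other formats in other versions.

```python
from __future__ import annotations

import re

# Line format taken from the stderr.txt excerpts in this thread, e.g.
# "10:04:19 (14068): wrapper: running yafu.exe (-threads 1 -batchfile in)".
WRAPPER_RUN = re.compile(r"wrapper: running (\S+) \((.*)\)")
THREADS_ARG = re.compile(r"-threads\s+(\d+)")


def threads_from_stderr(text: str) -> list[int]:
    """Return the -threads value of every 'wrapper: running' line, in order."""
    counts = []
    for line in text.splitlines():
        run = WRAPPER_RUN.search(line)
        if run is None:
            continue  # not a wrapper launch line
        threads = THREADS_ARG.search(run.group(2))
        if threads is not None:
            counts.append(int(threads.group(1)))
    return counts


sample = """10:04:19 (14068): wrapper (7.5.26014): starting
10:04:19 (14068): wrapper: running yafu.exe (-threads 1 -batchfile in)"""
print(threads_from_stderr(sample))  # [1]
```

A result of `[1]` for an 8t task matches the problem reported here; a healthy 8t task would show `[8]`.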

**mmonnin** (Joined: 21 Mar 17, Posts: 9, Credit: 29,115,150, RAC: 48,977)

I agree. This PC has no app_config, and in the project preferences I have "Max # CPUs" set to 8, yet several PCs run tasks single-threaded unless I create an app_config that sets something else. https://yafu.myfirewall.org/yafu/result.php?resultid=8009237

`14:26:12 (72084): wrapper: running yafu (-threads 1 -batchfile in)`

CPU time is less than run time and nowhere close to 8x run time.
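The CPU-time comparison can be turned into a number: CPU time divided by run time is roughly the average number of cores a task kept busy, so a healthy 8t task should be well above 1. A minimal sketch; the 50 % threshold, the function names and the example figures are assumptions for illustration, not a BOINC or project rule.

```python
from __future__ import annotations


def effective_threads(cpu_time_s: float, run_time_s: float) -> float:
    """Average number of busy cores: CPU time divided by wall-clock run time."""
    if run_time_s <= 0:
        raise ValueError("run time must be positive")
    return cpu_time_s / run_time_s


def looks_underthreaded(cpu_time_s: float, run_time_s: float,
                        expected_threads: int, threshold: float = 0.5) -> bool:
    """Hypothetical heuristic: flag tasks that kept fewer than
    `threshold * expected_threads` cores busy on average."""
    return effective_threads(cpu_time_s, run_time_s) < threshold * expected_threads


# Made-up figures: an 8t task with 9,000 s CPU time over 10,000 s run time.
print(effective_threads(9_000, 10_000))        # 0.9
print(looks_underthreaded(9_000, 10_000, 8))   # True
```

The ratio is only an average: the single-threaded phases yoyo describes pull it below 8 even for a correctly configured task, which is why the sketch uses a loose threshold rather than an exact match.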

**yoyo_rkn** (Volunteer moderator, Project administrator, Project developer, Project tester, Volunteer developer, Volunteer tester, Project scientist; Joined: 22 Aug 11, Posts: 787, Credit: 17,634,117, RAC: 0)

I changed something in the configuration. Please check whether newly downloaded WUs now use 8 threads.

yoyo

**mmonnin** (Joined: 21 Mar 17, Posts: 9, Credit: 29,115,150, RAC: 48,977)

> I changed something in the configuration. Please check whether newly downloaded WUs now use 8 threads.

Yes, one PC got 2 tasks in the last hour, and both are 8t according to BOINC. Some earlier tasks have been using more CPU time than run time. Thanks.

**Beyond** (Joined: 4 Oct 14, Posts: 36, Credit: 179,970,085, RAC: 66,332)

I also have six 8t WUs and one 4t WU that are running far too long and show very low CPU usage. Some are sitting at 100% done. Should I delete them?

**yoyo_rkn** (Volunteer moderator, Project administrator, Project developer, Project tester, Volunteer developer, Volunteer tester, Project scientist; Joined: 22 Aug 11, Posts: 787, Credit: 17,634,117, RAC: 0)

Yes, abort them.

**[AF>Le_Pommier] Jerome_C2005** (Joined: 22 Oct 13, Posts: 25, Credit: 11,092,247, RAC: 3,801)

For more than a week I have had a problem with never-ending tasks, and since last week I have canceled other tasks with the same issue. This is running on an i5 with 8 threads (so how on earth could it decide to run three 4t tasks?). They all have *one* gnfs-lasieve process (really) running for some time (more than 1 hour), then starting another one, and logging rows into factor.log, ggnfs.log and (sometimes) stderr.txt. So "it looks like they are not really dead" (I had some dead tasks in the past, where nothing was written to any log for hours), but now I really don't know what to do. This keeps recurring: I have canceled similar ones, and others behave the same way over and over.

**yoyo_rkn** (Volunteer moderator, Project administrator, Project developer, Project tester, Volunteer developer, Volunteer tester, Project scientist; Joined: 22 Aug 11, Posts: 787, Credit: 17,634,117, RAC: 0)

There was a configuration problem that made the workunits use only 1 thread. That is why many 4t workunits are running in parallel. This has been fixed; see above in this thread. Now 4t WUs should use at least 4 threads.

**[AF>Le_Pommier] Jerome_C2005** (Joined: 22 Oct 13, Posts: 25, Credit: 11,092,247, RAC: 3,801)

My main question was more "how long should I let them run" than the "too many" problem, especially since each one was running only 1 thread. The 3rd one has now finished after 2 days 7 hours, but the other two are still running, at 81 and 46 hours of run time... They seem to keep updating the logs, but less often: the last update was more than 4 hours ago for both. There is also another YAFU v134.05 (mt) task fully running (all threads) at the same time... So should I try to let the 2 remaining 4t tasks finish?

**mmonnin** (Joined: 21 Mar 17, Posts: 9, Credit: 29,115,150, RAC: 48,977)

As long as the stderr file is still being written to, I'd leave them running. Single-threaded tasks can sometimes take days.
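This rule of thumb (keep a task while its stderr.txt is still being written) can be checked from the file's modification time in the task's slot directory. A minimal sketch; the 6-hour idle limit and the function names are hypothetical, and a recent write only shows the task is alive, not that it will finish in time.

```python
from __future__ import annotations

import time
from pathlib import Path


def hours_since_update(path: Path, now: float | None = None) -> float:
    """Hours since `path` was last modified (uses the current time by default)."""
    now = time.time() if now is None else now
    return (now - path.stat().st_mtime) / 3600.0


def still_writing(slot_dir: Path, max_idle_hours: float = 6.0,
                  now: float | None = None) -> bool:
    """True if slot_dir/stderr.txt exists and changed within max_idle_hours.

    The 6-hour default is an arbitrary example, not a project recommendation.
    """
    stderr = slot_dir / "stderr.txt"
    return stderr.exists() and hours_since_update(stderr, now) <= max_idle_hours
```

For example, `still_writing(Path("slots/4"))`, run from the BOINC data directory, would check the slot mentioned at the start of this thread.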

**[AF>Le_Pommier] Jerome_C2005** (Joined: 22 Oct 13, Posts: 25, Credit: 11,092,247, RAC: 3,801)

The update rate of the log files now seems quite low, but it still looks like it is doing something... Only stderr.txt seems to be updated more often, for both tasks:

| File | Task 1 | Task 2 |
|---|---|---|
| factor.log | 04/06/2022 07:52 | 04/06/2022 06:55 |
| ggnfs.log | 04/06/2022 07:52 | 04/06/2022 06:55 |
| session.log | 31/05/2022 21:21 | 02/06/2022 09:27 |
| stderr.txt | 04/06/2022 16:50 | 04/06/2022 16:50 |
| wrapper_checkpoint.txt | 04/06/2022 07:52 | 04/06/2022 06:55 |

And with deadlines that were on 01/06 and 03/06, will they still count if they ever return a result?

**mmonnin** (Joined: 21 Mar 17, Posts: 9, Credit: 29,115,150, RAC: 48,977)

> The update rate of the log files now seems quite low, but it still looks like it is doing something... Only stderr.txt seems to be updated more often, for both tasks

My client has some tasks with a deadline of June 4th, but the project site says the 15th.

**[AF>Le_Pommier] Jerome_C2005** (Joined: 22 Oct 13, Posts: 25, Credit: 11,092,247, RAC: 3,801)

Oh, you are right, I had not noticed the different deadline on my project account: what shows as June 1st and 3rd in my BOINC Manager is the 11th and 13th here. However, for both tasks the only file updated from time to time is stderr.txt (4 hours ago for both), where a "total yield" row is added; the 2 log files have not been updated since yesterday morning (my previous screenshot is still valid for these). I really wonder whether it's of any use to let these run. I'd like to hear yoyo's opinion on this.

**yoyo_rkn** (Volunteer moderator, Project administrator, Project developer, Project tester, Volunteer developer, Volunteer tester, Project scientist; Joined: 22 Aug 11, Posts: 787, Credit: 17,634,117, RAC: 0)

The 4t application is assigned tasks that are so heavy that they need 4 cores to finish in 1-2 days. But a configuration problem on the server made those workunits use only 1 core. The additional deadline shown on the server means you can deliver results up to 10 days after the deadline and still get credit. So if you are fine with NOT getting credit for these WUs, you should abort them; you will then get workunits that use all cores, and things are back to normal processing. But if you want the credit (because the workunit is still running), you can let them run and hope that they finish within those extra 10 days.

yoyo
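The grace period is simple date arithmetic. Using the deadline from the first post in this thread as an example, the sketch below computes the last moment a result would still earn credit under the 10-day rule described above. It ignores anything else the server might do (such as resending the workunit), and the function names are hypothetical.

```python
from datetime import datetime, timedelta

GRACE = timedelta(days=10)  # "up to 10 days after the deadline", as described above


def credit_cutoff(client_deadline: datetime, grace: timedelta = GRACE) -> datetime:
    """Latest report time that still earns credit under the grace rule."""
    return client_deadline + grace


def still_creditable(client_deadline: datetime, report_time: datetime,
                     grace: timedelta = GRACE) -> bool:
    """True if a result reported at report_time falls within the grace period."""
    return report_time <= credit_cutoff(client_deadline, grace)


deadline = datetime.strptime("30.05.2022 19:53:32", "%d.%m.%Y %H:%M:%S")
print(credit_cutoff(deadline))  # 2022-06-09 19:53:32
```

The same rule matches Jerome's observation earlier in the thread: client deadlines of June 1st and 3rd appear as the 11th and 13th on the project site.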

**Matthias Lehmkuhl** (Joined: 7 Oct 11, Posts: 35, Credit: 3,310,980, RAC: 2,405)

For me, the 10 additional days did work for my 8t task running on 1 core. The result finished yesterday within the additional time, and the workunit did not need to be resent.

Matthias

**[AF>Le_Pommier] Jerome_C2005** (Joined: 22 Oct 13, Posts: 25, Credit: 11,092,247, RAC: 3,801)

The first task did finish and got (modestly) credited after more than 4 days of calculation. The second one (a 4t task) is still running (and still has some "extra deadline" left to finish), but at some point it suddenly decided it should run on 8 available threads (it is a 4t!!!): I now see 8 gnfs-lasieve processes running in memory. And I know these processes belong to *this* running task because:

- the slot contains work files dated 01/06, 02/06..., and in the log files I see references from 01/06 onwards;
- the other 2 tasks sent to me on 5 and 6 June are still waiting to run, have 0 crunch time, and don't even have a slot directory assigned.

Strange, isn't it?

Copyright © 2011-2025 Rechenkraft.net e.V. & yoyo